Attention Is All You Need

Ashish Vaswani, Noam Shazeer et al.

Introduction

- Dominant models in sequence transduction use complex recurrent or convolutional neural networks.

- The proposed Transformer relies solely on attention mechanisms, eliminating recurrence and convolutions.

- The Transformer is more parallelizable and requires significantly less time to train, while achieving superior translation quality.

Background

- Recurrent models compute sequentially along the positions of a sequence, which precludes parallelization within a training example.

- Attention mechanisms model dependencies regardless of their distance in the input or output sequences.

- The Transformer relies entirely on self-attention to compute representations of its input and output, without recurrent or convolutional layers.

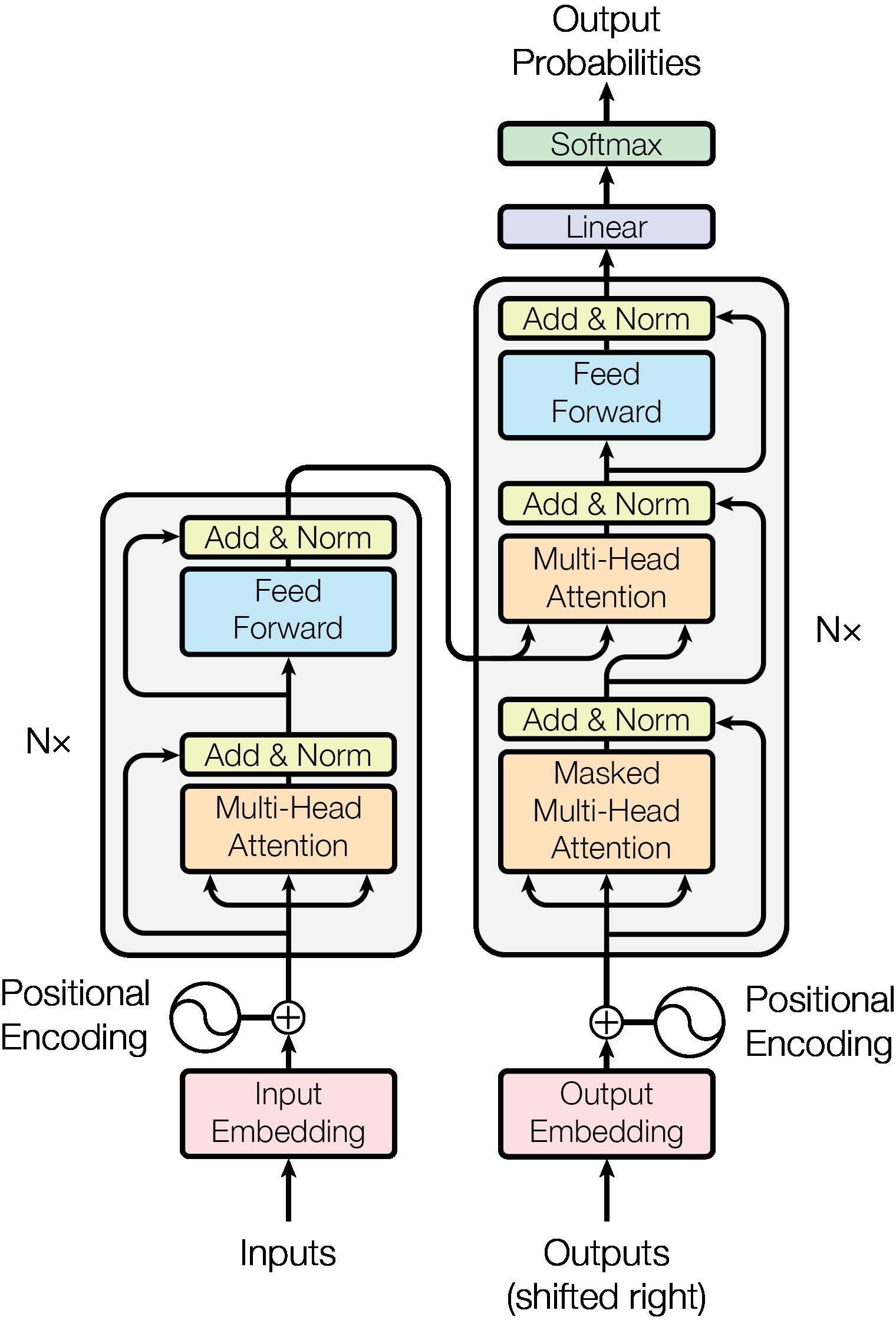

Model Architecture

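- The Transformer follows an encoder-decoder structure, using stacked self-attention and point-wise, fully connected layers in both the encoder and the decoder.

- The encoder and decoder are each a stack of N = 6 identical layers. Every sub-layer is wrapped in a residual connection followed by layer normalization, so its output is LayerNorm(x + Sublayer(x)).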
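The residual-plus-normalization wrapping around each sub-layer can be sketched in plain Python for a single position vector. This is a minimal sketch under stated assumptions: it uses the post-norm ordering LayerNorm(x + Sublayer(x)), the layer normalization has no learned gain or bias, dropout is omitted, and `double` is a hypothetical stand-in for a real sub-layer such as attention or the feed-forward network.

```python
import math
from typing import Callable, List

Vector = List[float]

def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Normalize to zero mean and unit variance (assumption: no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def residual_sublayer(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Compute LayerNorm(x + Sublayer(x)) for one position."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])

# Hypothetical sub-layer used only for illustration.
def double(v: Vector) -> Vector:
    return [2.0 * a for a in v]

out = residual_sublayer([1.0, 2.0, 3.0], double)
print([round(v, 4) for v in out])
```

Because the residual path adds the input back before normalizing, each sub-layer only has to learn a correction to x rather than a full replacement.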

Encoder and Decoder Stacks

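- Each encoder layer has two sub-layers: multi-head self-attention and a position-wise fully connected feed-forward network.

- Each decoder layer adds a third sub-layer that performs multi-head attention over the output of the encoder stack. The decoder's self-attention sub-layer is masked so that the prediction for position i can depend only on outputs at positions before i.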
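The decoder masking can be implemented by setting the softmax inputs for illegal connections to negative infinity, so they receive zero weight. A minimal sketch, assuming an additive mask that lets position i attend to positions j ≤ i; combined with shifting the decoder's output embeddings right by one position, this keeps predictions from seeing future outputs. The helper names `causal_mask` and `masked_softmax` are hypothetical.

```python
import math
from typing import List

def causal_mask(n: int) -> List[List[float]]:
    """Additive mask: 0.0 where position i may attend to j (j <= i), -inf otherwise."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]

def masked_softmax(scores: List[float], mask_row: List[float]) -> List[float]:
    """Softmax after adding the mask; entries masked with -inf get weight 0."""
    shifted = [s + m for s, m in zip(scores, mask_row)]
    top = max(shifted)  # finite, since j == i is never masked
    exps = [math.exp(s - top) for s in shifted]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]

mask = causal_mask(3)
# With equal raw scores, position 1 splits its weight over positions 0 and 1 only.
print(masked_softmax([0.5, 0.5, 0.5], mask[1]))
```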

Attention Mechanism

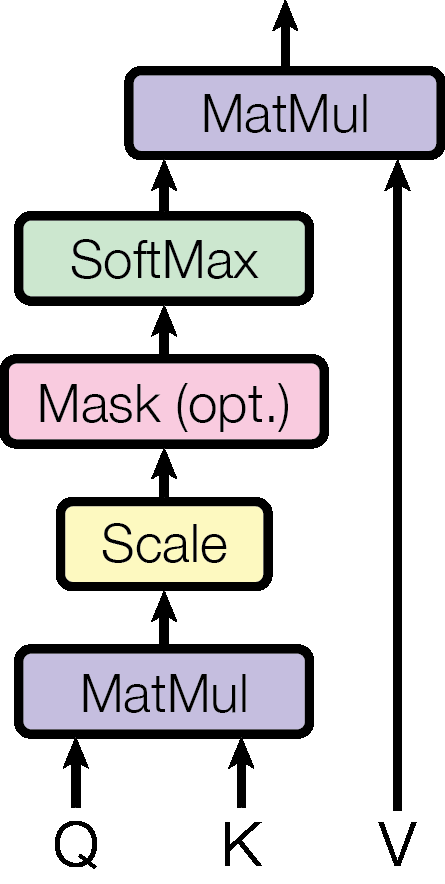

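- An attention function maps a query and a set of key-value pairs to an output, computed as a weighted sum of the values.

- Scaled Dot-Product Attention computes the weights by taking the dot product of the query with each key, dividing by $\sqrt{d_k}$ (the key dimension), and applying a softmax.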
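For a single query, the weighting scheme can be sketched directly: score each key against the query, scale by the square root of the key dimension, normalize with a softmax, and return the weighted sum of the values. This is an illustrative sketch in plain Python; the function names and the toy vectors are assumptions chosen for clarity.

```python
import math
from typing import List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def softmax(xs: Vector) -> Vector:
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query."""
    d_k = len(query)
    scores = [dot(query, k) / math.sqrt(d_k) for k in keys]
    weights = softmax(scores)
    # Output is the weighted sum of the value vectors.
    d_v = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(d_v)]

q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(attend(q, keys, values))
```

The query matches the first key more closely, so the output leans toward the first value vector, but the softmax still gives the second key some weight.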

Scaled Dot-Product Attention

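- Queries and keys have dimension $d_k$ and values have dimension $d_v$. The queries, keys, and values are packed into matrices Q, K, and V so that attention is computed for a whole set of queries at once.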

- Formula: $$ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V $$
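The matrix formula can be checked with a small pure-Python sketch that stores one query, key, or value per row. It is a minimal illustration, not an efficient implementation; the helper names are hypothetical, and the toy matrices use $d_k = 2$ and $d_v = 3$ to show that the output has one row per query and $d_v$ columns.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]

def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        top = max(row)  # stabilize each row independently
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, one query/key/value per row."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))                  # shape (n_q, n_k)
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    return matmul(softmax_rows(scaled), V)            # shape (n_q, d_v)

Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
out = attention(Q, K, V)
print(len(out), len(out[0]))
```

Each output row is a convex combination of the rows of V, weighted by how strongly that row's query matches each key.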